Artificial Intelligence Likely to Use More Energy, and Spread More Climate Misinformation


That artificial intelligence (AI) can help solve the climate crisis is a misguided notion – instead, the tech will likely drive up energy use and fuel the rapid spread of climate misinformation, according to a new report.

“AI has a really major role in addressing climate change.” Google’s chief sustainability officer, Kate Brandt, made this statement in December, calling the tech a turning point for climate progress. It followed a report released by the tech behemoth a month earlier, which found that AI could cut global emissions by 10% – as much as all of the EU’s carbon pollution – by 2030.

But climate activists say it’s not so simple, casting doubt over the ability of AI programmes like Google’s Gemini (formerly Bard) and OpenAI’s ChatGPT to truly curb the ecological crisis. “We seem to be hearing all the time that AI can save the planet, but we shouldn’t be believing this hype,” says Michael Khoo, climate disinformation program director at Friends of the Earth.

The organisation is part of the Climate Action Against Disinformation coalition, which has put out a new report questioning AI's true impact on climate change. It warns that the technology will lead to a rise in water and energy use from data centres, and will spread falsehoods and misinformation about climate science.

“It’s not like AI is ridding us of the internal combustion engine. People will be outraged to see how much more energy is being consumed by AI in the coming years, as well as how it will flood the zone with disinformation about climate change,” notes Khoo.

AI’s true impact on energy use and climate change

There are tons of instances where AI is being used as a tool to fight climate change, whether that's detecting floods or deforestation in real time, analysing crop imagery to find pest or disease problems, or performing tasks humans may not be able to, such as collecting data from the Arctic when it's too cold or conducting research in the oceans. Scientists can also use the tech to improve climate pattern modelling, conserve water, fight wildfires, recover recyclables, and reduce business emissions. Planet FWD, ClimateAI and CarbonBright are just a few examples of startups leveraging AI to help companies lower their climate impact.

However, the problem with using AI to reduce your carbon footprint is that AI itself has a massive carbon footprint. Data centres run 24/7, and, since most run on fossil fuel energy, they are estimated to account for 2.5-3.7% of global greenhouse gas (GHG) emissions, with one estimate forecasting that data centres and communication tech are on track to make up 14% of emissions by 2040.

The report points out that the tech industry has itself acknowledged the massive energy and water consumption of AI. Google's chairman has said that each new AI search query needs 10 times the energy cost of a traditional Google search, while OpenAI CEO Sam Altman has stated that AI will use vastly more energy than people expected. The International Energy Agency predicts that energy use from AI data centres will double in the next two years. Data centres also consume large amounts of water and are often located in areas with water scarcity.

Take ChatGPT, one of the most popular AI tools on the internet. Training the chatbot used as much energy as 120 American homes consume in a year, while training the more advanced GPT-4 model consumed roughly 40 times more. Data centres also need a lot of water, both indirectly, through the power plants that generate their electricity, and directly, to cool their computing systems. The US Department of Energy estimates that the country's data centres consumed 1.7 billion litres per day in 2014, or 0.14% of the nation's daily water use.
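To give a sense of scale, the figures quoted above can be turned into a quick back-of-envelope calculation. This is a minimal sketch, assuming "40 times more energy" means 40 times as much energy as ChatGPT's training run; the function and constant names are hypothetical, and the only inputs are the numbers already cited in the article.

```python
# Back-of-envelope scale check using only the figures quoted in the article.
# Assumption: "40 times more energy" is read as 40 times as much energy.

CHATGPT_TRAINING_HOMES = 120     # US homes' annual energy use (article figure)
GPT4_MULTIPLIER = 40             # GPT-4 training relative to ChatGPT (article figure)

DC_WATER_LITRES_PER_DAY = 1.7e9  # US data centres, 2014 (article figure)
DC_WATER_SHARE = 0.0014          # 0.14% of national daily water use (article figure)


def gpt4_training_homes(base_homes: float, multiplier: float) -> float:
    """Number of US homes whose annual energy use matches GPT-4's training."""
    return base_homes * multiplier


def implied_national_water(dc_litres: float, share: float) -> float:
    """Total national daily water use implied by the data-centre share."""
    return dc_litres / share


if __name__ == "__main__":
    homes = gpt4_training_homes(CHATGPT_TRAINING_HOMES, GPT4_MULTIPLIER)
    total = implied_national_water(DC_WATER_LITRES_PER_DAY, DC_WATER_SHARE)
    print(f"GPT-4 training ~ {homes:,.0f} US homes for a year")           # 4,800
    print(f"Implied US water use ~ {total / 1e12:.2f} trillion litres/day")  # 1.21
```

On these assumptions, GPT-4's training run works out to roughly a year's energy use for 4,800 American homes, and the 0.14% share implies a national total of about 1.2 trillion litres of water a day.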

“There is no basis to believe AI’s presence will reduce energy use; all the evidence indicates it will massively increase energy use due to all the new data centres,” says Khoo. “We know there will be small gains in efficiency in data centres, but the simple math is that carbon emissions will go up.”

How AI spreads climate misinformation

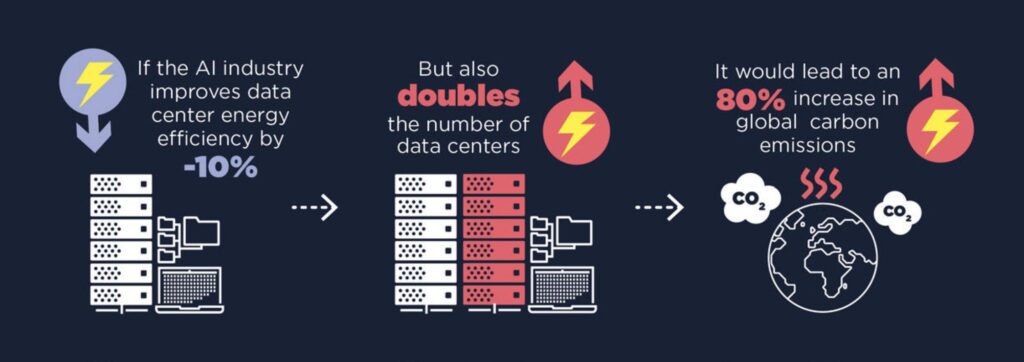

There is a lack of transparency when it comes to reporting AI's true impact. For instance, if the AI industry improved data centre energy efficiency by 10% while doubling the number of centres, the energy use and carbon emissions of those data centres would still rise by 80% (2 × 0.9 = 1.8), all else being equal. And this is where misinformation comes in.
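The efficiency-versus-growth arithmetic behind the 10% and "doubling" example can be written out in a few lines. This is a minimal sketch under two simplifying assumptions that are not taken from the report: every data centre does the same amount of work, and the carbon intensity of the electricity supplying them stays fixed. The function names are hypothetical. Note that the result is the change in the data centres' own emissions, not in global emissions.

```python
# Sketch: do efficiency gains outpace growth in the number of data centres?
# Assumptions (not from the report): identical workload per centre and an
# unchanged electricity mix, so emissions scale directly with energy use.


def relative_emissions(efficiency_gain: float, capacity_multiplier: float) -> float:
    """Data-centre emissions after the change, relative to before (1.0 = unchanged).

    efficiency_gain: fractional cut in energy per centre (0.10 = 10%).
    capacity_multiplier: how many times as many centres there are (2.0 = doubled).
    """
    if not 0.0 <= efficiency_gain < 1.0:
        raise ValueError("efficiency_gain must be in [0, 1)")
    if capacity_multiplier < 0:
        raise ValueError("capacity_multiplier must be non-negative")
    energy_per_centre = 1.0 - efficiency_gain
    return energy_per_centre * capacity_multiplier


def percent_change(efficiency_gain: float, capacity_multiplier: float) -> float:
    """Percentage change in data-centre emissions."""
    return (relative_emissions(efficiency_gain, capacity_multiplier) - 1.0) * 100.0


if __name__ == "__main__":
    # 10% more efficient, twice as many centres
    print(f"{percent_change(0.10, 2.0):.0f}%")  # prints 80%
    # Efficiency would have to halve energy per centre just to break even
    print(f"{percent_change(0.50, 2.0):.0f}%")  # prints 0%
```

The second case shows why small efficiency gains are easily swamped: if the number of centres doubles, energy use per centre must fall by half before emissions stop rising.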

The report argues that generative AI can allow climate deniers – that's 15% of Americans – to produce influential false content more easily, cheaply and rapidly, and spread it across social media and search engines. The World Economic Forum has ranked misinformation and disinformation, increasingly generated by AI, as the most severe global risk over the next two years, ahead of extreme weather. Technology has already helped the fossil fuel industry spread climate denial, with some campaigns falsely blaming wind power for whale deaths in New Jersey and power outages in Texas.

“AI will only continue this trend as more tailored content is produced and AI algorithms amplify it,” the report notes. Climate disinformation on platforms like Twitter/X already tripled in 2022, and the authors suggest tech businesses have little incentive to stop it, given that companies such as Google make an estimated $13.4 million a year from climate-denier accounts.

“We can see AI fracturing the information ecosystem just as we need it to pull it back together,” Khoo said. “AI is perfect for flooding the zone for quick, cheaply produced crap. You can easily see how it will be a tool for climate disinformation. We will see people micro-targeted with climate disinformation content in a sort of relentless way.”

What companies and governments need to do

Climate Action Against Disinformation sets out three core principles for better AI development in its recommendations. The first, transparency, calls on regulators to ensure that AI companies publicly disclose their full-cycle emissions and energy use, explain how their models produce information and measure how accurate that information is on climate change, and give advertisers access to data so they can make sure they don't monetise content that conflicts with their policies.

The second principle is safety: the report says companies need to demonstrate that their products are safe for humans and the climate, show how they are safeguarded against discrimination, bias and misinformation, and enforce their community guidelines as well as their disinformation and monetisation policies. Governments, meanwhile, must develop common AI safety reporting standards and fund studies into the effect of AI on energy use and climate disinformation.

Finally, policymakers are also the subject of the third principle, accountability. The report says they need to protect whistleblowers who expose AI safety issues, enforce safety and transparency rules with strong penalties for non-compliance, and hold companies and executives accountable for any harm caused by generative AI.

“The skyrocketing use of electricity and water, combined with its ability to rapidly spread disinformation, makes AI one of the greatest emerging climate threat multipliers,” said Charlie Cray, senior strategist at Greenpeace USA (which is part of the coalition). “Governments and companies must stop pretending that increasing equipment efficiencies and directing AI tools towards weather disaster responses are enough to mitigate AI’s contribution to the climate emergency.”